Data exploration¶

Let us first take a look at the data using Pandas. Note that we use the preprocessed, cleaned data from PhilippMaxx.

path = 'data/clean_train.txt'

df = open_data(path)

df.head()

df.shape

As you can see we are dealing with very unformal language, typos, and bad grammar. Moreoever, we are given an unbalanced dataset:

df['label'].value_counts()

Data Transforms¶

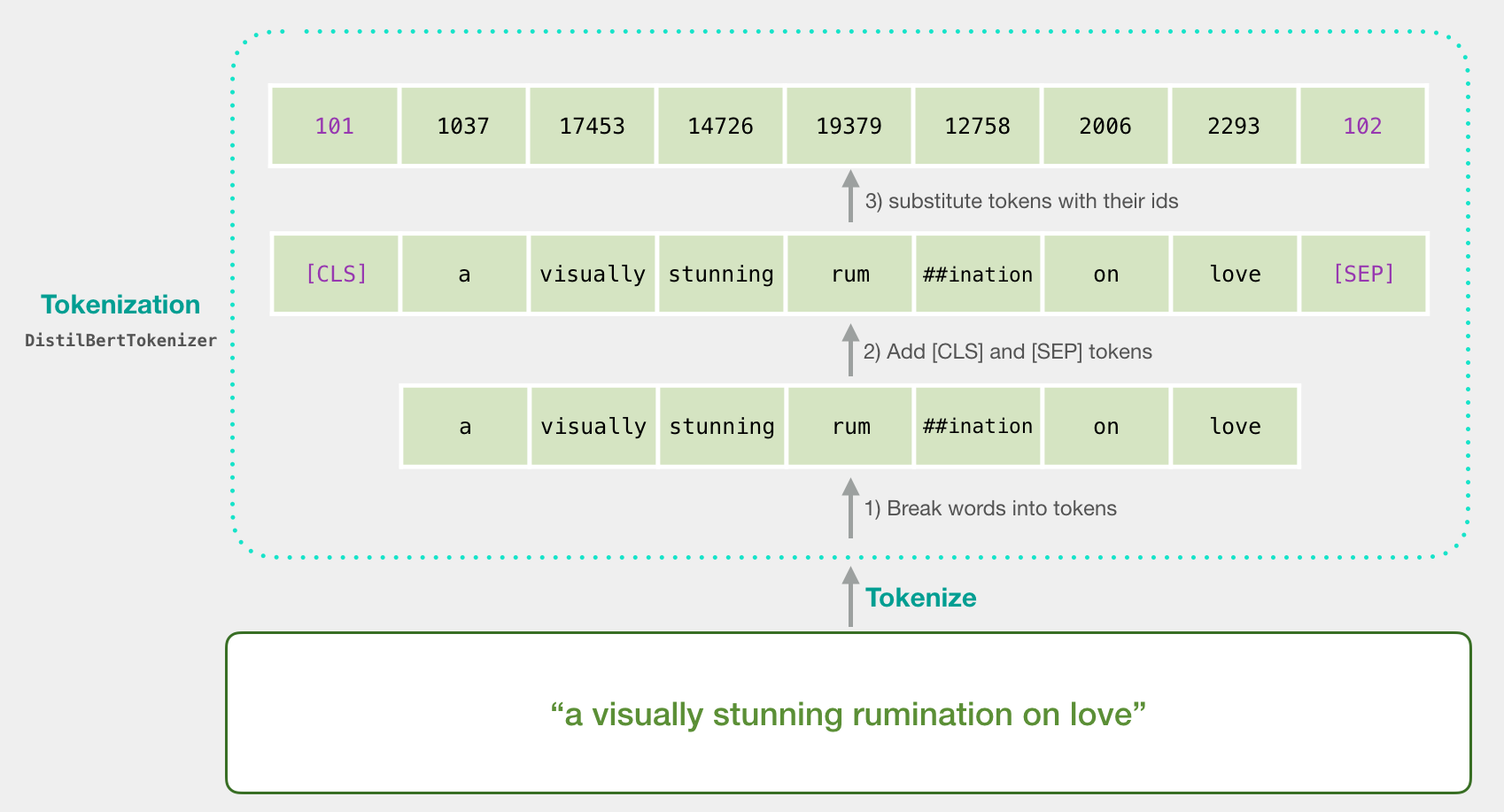

We now tokenize the examples into features and create attention masks according to the (Distil)Bert standard. The following image from Jay Alammar's great blog post A Visual Guide to Using BERT for the First Time visualizes the tokenization step.

max_seq_len = 10

padded, attention_mask = transform_data(df, max_seq_len)

assert padded.shape == attention_mask.shape == df[['turn1','turn2','turn3']].shape + (max_seq_len,)

Lets digest the outcome for the first two conversations.

df[:2]

The utterances are transformed according to the tokenizer vocabulary

vocab = np.array(list(DistilBertTokenizer.from_pretrained('distilbert-base-uncased').vocab.items()))

vocab[padded[:2]].reshape(2,3,-1)

...resulting in the corresponding input ids (padded with zeros to max_seq_len)

padded[:2]

...and masks for the self-attention layers specifying the length of each utterance:

attention_mask[:2]

We also transform the labels to integers according to a given dictionary emo_dict.

emo_dict = {'others': 0, 'sad': 1, 'angry': 2, 'happy': 3}

labels = get_labels(df, emo_dict)

Let us look at the result for our two conversations from above:

labels[:2]

The following widget allows to interactively explore the former data transformations.

PyTorch Dataloader¶

Finally we aggregate all the functions above into a PyTorch dataloader (with optional distributed training).

batch_size = 5

loader = dataloader(path, max_seq_len, batch_size, emo_dict)

A batch consists of batch_size input ids, attention_masks, and optionally the corresponding labels.

batch = next(iter(loader))

batch

We can find, for instance, the first conversation of this batch in our input ids above.

conversation = batch[0][0]

assert torch.any(torch.all(torch.all(conversation == padded, dim=2), dim=1)) == True